Docker Deployment

Updated: January 23, 2026, 15:19

Example: deploying Qwen/Qwen2.5-32B-Instruct

Model download URL:

https://huggingface.co/Qwen/Qwen2.5-32B-Instruct

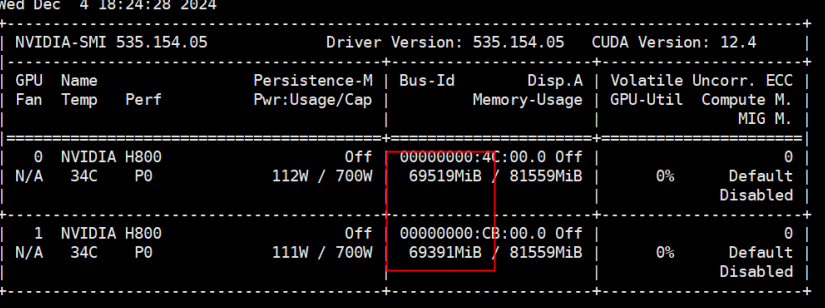

The model weights total about 61 GB, so two 80 GB GPUs (A100, A800, H100, or H800) are needed.

Download the model to local disk beforehand, keeping the directory structure Qwen/Qwen2.5-32B-Instruct.
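One possible way to fetch the weights ahead of time is the `huggingface_hub` Python package. The sketch below is an assumption rather than part of the original setup: it presumes `huggingface_hub` is installed, and the helper names `target_dir` and `download` are hypothetical. It reproduces the Qwen/Qwen2.5-32B-Instruct directory layout under a root directory of your choice. If a reachable mirror is available, the `HF_ENDPOINT` environment variable can point the library at it.

```python
from pathlib import Path

REPO_ID = "Qwen/Qwen2.5-32B-Instruct"


def target_dir(root: str, repo_id: str = REPO_ID) -> Path:
    """Return <root>/<org>/<name>, the layout the deployment expects."""
    return Path(root).joinpath(*repo_id.split("/"))


def download(root: str, repo_id: str = REPO_ID) -> Path:
    """Download every file of the repository into target_dir(root, repo_id)."""
    # Imported lazily so the path helper works without the package installed.
    from huggingface_hub import snapshot_download

    dest = target_dir(root, repo_id)
    dest.mkdir(parents=True, exist_ok=True)
    snapshot_download(repo_id=repo_id, local_dir=str(dest))
    return dest
```

Calling `download("/model-data/path")` on the host would place the files under /model-data/path/Qwen/Qwen2.5-32B-Instruct, which is the host directory the `-v` option mounts into the container.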

    docker run \
      --runtime nvidia \
      --gpus all \
      --shm-size 16G \
      -v /model-data/path:/model-data \
      -p 80:80 \
      vllm/vllm-openai:v0.6.4 \
      --host 0.0.0.0 \
      --port 80 \
      --model /model-data/Qwen/Qwen2.5-32B-Instruct \
      --served-model-name Qwen/Qwen2.5-32B-Instruct \
      --device cuda \
      --tensor-parallel-size 2 \
      --api-key xxx

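Notes

- -v /model-data: the mounted model directory. Downloads from Hugging Face tend to time out and fail from within mainland China, so the model must be downloaded manually in advance.

- --host: the hostname to listen on; 0.0.0.0 means any IP address.

- --port: the port number to listen on.

- --model: the model name or path. When a name is given, the model is loaded from /root/.cache/huggingface and is downloaded automatically from Hugging Face if it is not found there.

- --served-model-name: the model name exposed by the API. Use this option to set the name when --model is a path.

- --device: the device type.

- --tensor-parallel-size: the number of GPUs to use.

- --api-key: the API key.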
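To illustrate how the served model name and API key are used by a client, here is a minimal sketch using only the Python standard library. It assumes the server is reachable at http://localhost:80 and that the key is the placeholder `xxx` from the command above. The function names `build_chat_request` and `chat` are hypothetical. The key is sent as a bearer token, and the `model` field must match the value given to --served-model-name.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str,
                       prompt: str) -> urllib.request.Request:
    """Build a POST request for the OpenAI-compatible chat completions route."""
    payload = {
        "model": model,  # must match --served-model-name
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # The value of --api-key is sent as a bearer token.
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def chat(base_url: str, api_key: str, model: str, prompt: str) -> str:
    """Send the request and return the assistant's reply text."""
    request = build_chat_request(base_url, api_key, model, prompt)
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With the container running, `chat("http://localhost:80", "xxx", "Qwen/Qwen2.5-32B-Instruct", "Hello")` would return the model's reply as a string. A request with a missing or wrong key should be rejected by the server.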

GPU memory usage
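The "Memory profiling results" lines in the startup log give a rough per-GPU picture: each GPU loads about 30.7 GB of weights, roughly half of the model. As a sketch, the arithmetic below assumes that the KV cache receives whatever is left of the gpu_memory_utilization budget after peak Torch and non-Torch usage. That relation is inferred from the logged numbers, not taken from vLLM's source, and the function name is hypothetical.

```python
def kv_cache_budget_gib(total_gib: float, utilization: float,
                        peak_torch_gib: float, non_torch_gib: float) -> float:
    """Memory left for the KV cache on one GPU, in GiB (assumed relation)."""
    return total_gib * utilization - peak_torch_gib - non_torch_gib


# Values copied from the two "Memory profiling results" log lines.
driver = kv_cache_budget_gib(79.11, 0.90, 34.53, 1.75)
worker = kv_cache_budget_gib(79.11, 0.90, 34.53, 1.62)
print(f"driver: {driver:.2f} GiB, worker: {worker:.2f} GiB")
# prints "driver: 34.92 GiB, worker: 35.05 GiB"
```

Both results agree with the kv_cache_size values reported in the log (34.92GiB and 35.05GiB), which suggests that lowering gpu_memory_utilization would shrink the KV cache by a corresponding amount.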

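Startup log

Note: this log was captured from a run that mounted the model at /model-cache, so its paths differ from the /model-data path used in the command above.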

INFO 12-04 18:20:56 api_server.py:585] vLLM API server version 0.6.4

INFO 12-04 18:20:56 api_server.py:586] args: Namespace(host='0.0.0.0', port=80, uvicorn_log_level='info', allow_credentials=False, allowed_origins=['*'], allowed_methods=['*'], allowed_headers=['*'], api_key='rjgf@2024', lora_modules=None, prompt_adapters=None, chat_template=None, response_role='assistant', ssl_keyfile=None, ssl_certfile=None, ssl_ca_certs=None, ssl_cert_reqs=0, root_path=None, middleware=[], return_tokens_as_token_ids=False, disable_frontend_multiprocessing=False, enable_auto_tool_choice=False, tool_call_parser=None, tool_parser_plugin='', model='/model-cache/Qwen/Qwen2.5-32B-Instruct', task='auto', tokenizer=None, skip_tokenizer_init=False, revision=None, code_revision=None, tokenizer_revision=None, tokenizer_mode='auto', chat_template_text_format='string', trust_remote_code=True, allowed_local_media_path=None, download_dir=None, load_format='auto', config_format=<ConfigFormat.AUTO: 'auto'>, dtype='auto', kv_cache_dtype='auto', quantization_param_path=None, max_model_len=None, guided_decoding_backend='outlines', distributed_executor_backend=None, worker_use_ray=False, pipeline_parallel_size=1, tensor_parallel_size=2, max_parallel_loading_workers=None, ray_workers_use_nsight=False, block_size=16, enable_prefix_caching=False, disable_sliding_window=False, use_v2_block_manager=False, num_lookahead_slots=0, seed=0, swap_space=4, cpu_offload_gb=0, gpu_memory_utilization=0.9, num_gpu_blocks_override=None, max_num_batched_tokens=None, max_num_seqs=256, max_logprobs=20, disable_log_stats=False, quantization=None, rope_scaling=None, rope_theta=None, hf_overrides=None, enforce_eager=False, max_seq_len_to_capture=8192, disable_custom_all_reduce=False, tokenizer_pool_size=0, tokenizer_pool_type='ray', tokenizer_pool_extra_config=None, limit_mm_per_prompt=None, mm_processor_kwargs=None, enable_lora=False, enable_lora_bias=False, max_loras=1, max_lora_rank=16, lora_extra_vocab_size=256, lora_dtype='auto', long_lora_scaling_factors=None, max_cpu_loras=None, 
fully_sharded_loras=False, enable_prompt_adapter=False, max_prompt_adapters=1, max_prompt_adapter_token=0, device='cuda', num_scheduler_steps=1, multi_step_stream_outputs=True, scheduler_delay_factor=0.0, enable_chunked_prefill=None, speculative_model=None, speculative_model_quantization=None, num_speculative_tokens=None, speculative_disable_mqa_scorer=False, speculative_draft_tensor_parallel_size=None, speculative_max_model_len=None, speculative_disable_by_batch_size=None, ngram_prompt_lookup_max=None, ngram_prompt_lookup_min=None, spec_decoding_acceptance_method='rejection_sampler', typical_acceptance_sampler_posterior_threshold=None, typical_acceptance_sampler_posterior_alpha=None, disable_logprobs_during_spec_decoding=None, model_loader_extra_config=None, ignore_patterns=[], preemption_mode=None, served_model_name=['Qwen/Qwen2.5-32B-Instruct'], qlora_adapter_name_or_path=None, otlp_traces_endpoint=None, collect_detailed_traces=None, disable_async_output_proc=False, scheduling_policy='fcfs', override_neuron_config=None, override_pooler_config=None, disable_log_requests=False, max_log_len=None, disable_fastapi_docs=False, enable_prompt_tokens_details=False)

INFO 12-04 18:20:56 api_server.py:175] Multiprocessing frontend to use ipc:///tmp/bdb30880-0067-48b4-908c-68688ced64f4 for IPC Path.

INFO 12-04 18:20:56 api_server.py:194] Started engine process with PID 76

INFO 12-04 18:21:00 config.py:350] This model supports multiple tasks: {'generate', 'embedding'}. Defaulting to 'generate'.

INFO 12-04 18:21:00 config.py:1020] Defaulting to use mp for distributed inference

WARNING 12-04 18:21:00 arg_utils.py:1075] [DEPRECATED] Block manager v1 has been removed, and setting --use-v2-block-manager to True or False has no effect on vLLM behavior. Please remove --use-v2-block-manager in your engine argument. If your use case is not supported by SelfAttnBlockSpaceManager (i.e. block manager v2), please file an issue with detailed information.

INFO 12-04 18:21:03 config.py:350] This model supports multiple tasks: {'generate', 'embedding'}. Defaulting to 'generate'.

INFO 12-04 18:21:03 config.py:1020] Defaulting to use mp for distributed inference

WARNING 12-04 18:21:03 arg_utils.py:1075] [DEPRECATED] Block manager v1 has been removed, and setting --use-v2-block-manager to True or False has no effect on vLLM behavior. Please remove --use-v2-block-manager in your engine argument. If your use case is not supported by SelfAttnBlockSpaceManager (i.e. block manager v2), please file an issue with detailed information.

INFO 12-04 18:21:03 llm_engine.py:249] Initializing an LLM engine (v0.6.4) with config: model='/model-cache/Qwen/Qwen2.5-32B-Instruct', speculative_config=None, tokenizer='/model-cache/Qwen/Qwen2.5-32B-Instruct', skip_tokenizer_init=False, tokenizer_mode=auto, revision=None, override_neuron_config=None, tokenizer_revision=None, trust_remote_code=True, dtype=torch.bfloat16, max_seq_len=32768, download_dir=None, load_format=LoadFormat.AUTO, tensor_parallel_size=2, pipeline_parallel_size=1, disable_custom_all_reduce=False, quantization=None, enforce_eager=False, kv_cache_dtype=auto, quantization_param_path=None, device_config=cuda, decoding_config=DecodingConfig(guided_decoding_backend='outlines'), observability_config=ObservabilityConfig(otlp_traces_endpoint=None, collect_model_forward_time=False, collect_model_execute_time=False), seed=0, served_model_name=Qwen/Qwen2.5-32B-Instruct, num_scheduler_steps=1, chunked_prefill_enabled=False multi_step_stream_outputs=True, enable_prefix_caching=False, use_async_output_proc=True, use_cached_outputs=True, chat_template_text_format=string, mm_processor_kwargs=None, pooler_config=None)

WARNING 12-04 18:21:04 multiproc_gpu_executor.py:56] Reducing Torch parallelism from 112 threads to 1 to avoid unnecessary CPU contention. Set OMP_NUM_THREADS in the external environment to tune this value as needed.

INFO 12-04 18:21:04 custom_cache_manager.py:17] Setting Triton cache manager to: vllm.triton_utils.custom_cache_manager:CustomCacheManager

INFO 12-04 18:21:04 selector.py:135] Using Flash Attention backend.

(VllmWorkerProcess pid=348) INFO 12-04 18:21:04 selector.py:135] Using Flash Attention backend.

(VllmWorkerProcess pid=348) INFO 12-04 18:21:04 multiproc_worker_utils.py:215] Worker ready; awaiting tasks

(VllmWorkerProcess pid=348) INFO 12-04 18:21:05 utils.py:960] Found nccl from library libnccl.so.2

INFO 12-04 18:21:05 utils.py:960] Found nccl from library libnccl.so.2

(VllmWorkerProcess pid=348) INFO 12-04 18:21:05 pynccl.py:69] vLLM is using nccl==2.21.5

INFO 12-04 18:21:05 pynccl.py:69] vLLM is using nccl==2.21.5

INFO 12-04 18:21:06 custom_all_reduce_utils.py:204] generating GPU P2P access cache in /root/.cache/vllm/gpu_p2p_access_cache_for_0,1.json

INFO 12-04 18:21:17 custom_all_reduce_utils.py:242] reading GPU P2P access cache from /root/.cache/vllm/gpu_p2p_access_cache_for_0,1.json

(VllmWorkerProcess pid=348) INFO 12-04 18:21:17 custom_all_reduce_utils.py:242] reading GPU P2P access cache from /root/.cache/vllm/gpu_p2p_access_cache_for_0,1.json

INFO 12-04 18:21:17 shm_broadcast.py:236] vLLM message queue communication handle: Handle(connect_ip='127.0.0.1', local_reader_ranks=[1], buffer=<vllm.distributed.device_communicators.shm_broadcast.ShmRingBuffer object at 0x7f2cbfea6120>, local_subscribe_port=55433, remote_subscribe_port=None)

INFO 12-04 18:21:17 model_runner.py:1072] Starting to load model /model-cache/Qwen/Qwen2.5-32B-Instruct...

(VllmWorkerProcess pid=348) INFO 12-04 18:21:17 model_runner.py:1072] Starting to load model /model-cache/Qwen/Qwen2.5-32B-Instruct...

Loading safetensors checkpoint shards: 0% Completed | 0/17 [00:00<?, ?it/s]

Loading safetensors checkpoint shards: 6% Completed | 1/17 [00:00<00:08, 1.98it/s]

Loading safetensors checkpoint shards: 12% Completed | 2/17 [00:01<00:08, 1.72it/s]

Loading safetensors checkpoint shards: 18% Completed | 3/17 [00:01<00:08, 1.65it/s]

Loading safetensors checkpoint shards: 24% Completed | 4/17 [00:02<00:08, 1.62it/s]

Loading safetensors checkpoint shards: 29% Completed | 5/17 [00:03<00:07, 1.61it/s]

Loading safetensors checkpoint shards: 35% Completed | 6/17 [00:03<00:06, 1.59it/s]

Loading safetensors checkpoint shards: 41% Completed | 7/17 [00:04<00:06, 1.58it/s]

Loading safetensors checkpoint shards: 47% Completed | 8/17 [00:04<00:05, 1.57it/s]

Loading safetensors checkpoint shards: 53% Completed | 9/17 [00:05<00:05, 1.58it/s]

Loading safetensors checkpoint shards: 59% Completed | 10/17 [00:06<00:04, 1.57it/s]

Loading safetensors checkpoint shards: 65% Completed | 11/17 [00:06<00:03, 1.57it/s]

Loading safetensors checkpoint shards: 71% Completed | 12/17 [00:07<00:03, 1.59it/s]

Loading safetensors checkpoint shards: 76% Completed | 13/17 [00:08<00:02, 1.59it/s]

Loading safetensors checkpoint shards: 82% Completed | 14/17 [00:08<00:01, 1.60it/s]

Loading safetensors checkpoint shards: 88% Completed | 15/17 [00:09<00:01, 1.76it/s]

Loading safetensors checkpoint shards: 94% Completed | 16/17 [00:09<00:00, 1.71it/s]

(VllmWorkerProcess pid=348) INFO 12-04 18:21:27 model_runner.py:1077] Loading model weights took 30.7097 GB

Loading safetensors checkpoint shards: 100% Completed | 17/17 [00:10<00:00, 1.67it/s]

Loading safetensors checkpoint shards: 100% Completed | 17/17 [00:10<00:00, 1.63it/s]

INFO 12-04 18:21:28 model_runner.py:1077] Loading model weights took 30.7097 GB

(VllmWorkerProcess pid=348) INFO 12-04 18:21:31 worker.py:232] Memory profiling results: total_gpu_memory=79.11GiB initial_memory_usage=31.87GiB peak_torch_memory=34.53GiB memory_usage_post_profile=32.37Gib non_torch_memory=1.62GiB kv_cache_size=35.05GiB gpu_memory_utilization=0.90

INFO 12-04 18:21:31 worker.py:232] Memory profiling results: total_gpu_memory=79.11GiB initial_memory_usage=31.87GiB peak_torch_memory=34.53GiB memory_usage_post_profile=32.50Gib non_torch_memory=1.75GiB kv_cache_size=34.92GiB gpu_memory_utilization=0.90

INFO 12-04 18:21:31 distributed_gpu_executor.py:57] # GPU blocks: 17879, # CPU blocks: 2048

INFO 12-04 18:21:31 distributed_gpu_executor.py:61] Maximum concurrency for 32768 tokens per request: 8.73x

(VllmWorkerProcess pid=348) INFO 12-04 18:21:33 model_runner.py:1400] Capturing cudagraphs for decoding. This may lead to unexpected consequences if the model is not static. To run the model in eager mode, set 'enforce_eager=True' or use '--enforce-eager' in the CLI.

(VllmWorkerProcess pid=348) INFO 12-04 18:21:33 model_runner.py:1404] If out-of-memory error occurs during cudagraph capture, consider decreasing `gpu_memory_utilization` or switching to eager mode. You can also reduce the `max_num_seqs` as needed to decrease memory usage.

INFO 12-04 18:21:33 model_runner.py:1400] Capturing cudagraphs for decoding. This may lead to unexpected consequences if the model is not static. To run the model in eager mode, set 'enforce_eager=True' or use '--enforce-eager' in the CLI.

INFO 12-04 18:21:33 model_runner.py:1404] If out-of-memory error occurs during cudagraph capture, consider decreasing `gpu_memory_utilization` or switching to eager mode. You can also reduce the `max_num_seqs` as needed to decrease memory usage.

(VllmWorkerProcess pid=348) INFO 12-04 18:21:41 custom_all_reduce.py:224] Registering 4515 cuda graph addresses

INFO 12-04 18:21:41 custom_all_reduce.py:224] Registering 4515 cuda graph addresses

(VllmWorkerProcess pid=348) INFO 12-04 18:21:41 model_runner.py:1518] Graph capturing finished in 9 secs, took 0.39 GiB

INFO 12-04 18:21:41 model_runner.py:1518] Graph capturing finished in 9 secs, took 0.39 GiB

INFO 12-04 18:21:42 api_server.py:249] vLLM to use /tmp/tmpn3tmws97 as PROMETHEUS_MULTIPROC_DIR

INFO 12-04 18:21:42 launcher.py:19] Available routes are:

INFO 12-04 18:21:42 launcher.py:27] Route: /openapi.json, Methods: GET, HEAD

INFO 12-04 18:21:42 launcher.py:27] Route: /docs, Methods: GET, HEAD

INFO 12-04 18:21:42 launcher.py:27] Route: /docs/oauth2-redirect, Methods: GET, HEAD

INFO 12-04 18:21:42 launcher.py:27] Route: /redoc, Methods: GET, HEAD

INFO 12-04 18:21:42 launcher.py:27] Route: /health, Methods: GET

INFO 12-04 18:21:42 launcher.py:27] Route: /tokenize, Methods: POST

INFO 12-04 18:21:42 launcher.py:27] Route: /detokenize, Methods: POST

INFO 12-04 18:21:42 launcher.py:27] Route: /v1/models, Methods: GET

INFO 12-04 18:21:42 launcher.py:27] Route: /version, Methods: GET

INFO 12-04 18:21:42 launcher.py:27] Route: /v1/chat/completions, Methods: POST

INFO 12-04 18:21:42 launcher.py:27] Route: /v1/completions, Methods: POST

INFO 12-04 18:21:42 launcher.py:27] Route: /v1/embeddings, Methods: POST

INFO: Started server process [1]

INFO: Waiting for application startup.

INFO: Application startup complete.

INFO: Uvicorn running on http://0.0.0.0:80 (Press CTRL+C to quit)
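The "Maximum concurrency" figure in the log can be reproduced from other logged values. The sketch below assumes the figure is the number of KV-cache tokens (GPU blocks times block_size) divided by the maximum sequence length. That reading is inferred from the numbers in the log, and the function name is hypothetical.

```python
def max_concurrency(num_gpu_blocks: int, block_size: int,
                    max_model_len: int) -> float:
    """Number of full-length requests whose KV cache fits at once."""
    cached_tokens = num_gpu_blocks * block_size
    return cached_tokens / max_model_len


# From the log: 17879 GPU blocks, block_size=16, max_seq_len=32768.
tokens = 17879 * 16
ratio = max_concurrency(17879, 16, 32768)
print(tokens, f"{ratio:.2f}x")
# prints "286064 8.73x"
```

In other words, the KV cache holds about 286,064 tokens in total. Requests shorter than 32768 tokens use fewer blocks, so more of them can run at the same time.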