gpu-operator

Updated: 2025-12-17 15:34


Official documentation

https://docs.nvidia.com/datacenter/cloud-native/gpu-operator/latest/gpu-operator-mig.html

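The GPU Operator bundles a set of components, including:

- Device plugin (nvidia-device-plugin)
- Node Feature Discovery (NFD)
- GPU Feature Discovery (GFD)
- Driver management (nvidia-driver)
- Container runtime configuration (nvidia-container-toolkit)
- MIG partitioning (nvidia-mig-manager)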

Installing the GPU Operator with Helm

Official Helm installation guide

https://docs.nvidia.com/datacenter/cloud-native/gpu-operator/latest/getting-started.html

helm repo add nvidia https://helm.ngc.nvidia.com/nvidia
helm repo update

helm install \
  --wait \
  --generate-name \
  -n gpu-operator \
  --create-namespace \
  nvidia/gpu-operator \
  --version=v25.3.1 \
  --set driver.enabled=false \
  --set mig.strategy=mixed

# --set driver.enabled=false
#   Skips installing the GPU driver; use it when the nodes already have the driver installed.
# --set mig.strategy=mixed
#   Exposes each MIG profile as its own resource name (nvidia.com/mig-<profile>).

# Uninstall
# helm delete -n gpu-operator $(helm list -n gpu-operator | grep gpu-operator | awk '{print $1}')
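The uninstall command above extracts release names from the `helm list` table with grep and awk. Helm can do that filtering itself: `--short` prints release names only, and `--filter` takes a regular expression matched against the release name (with `--generate-name`, releases are named after the chart plus a timestamp). A minimal sketch; `gpu_operator_releases` and `uninstall_gpu_operator` are hypothetical helper names, not part of Helm or the GPU Operator.

```shell
#!/bin/sh
# Namespace used by the install command above.
NS=gpu-operator

# Print the names of gpu-operator releases only (no table header to strip).
gpu_operator_releases() {
  helm list -n "$NS" --short --filter '^gpu-operator'
}

# Uninstall every matching release and report each name removed.
uninstall_gpu_operator() {
  local release
  for release in $(gpu_operator_releases); do
    helm uninstall -n "$NS" "$release" >/dev/null && echo "removed $release"
  done
}
```

`helm delete` and `helm uninstall` are the same command in Helm 3; the sketch uses the latter name.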

Configuration file

The h200-149 profile splits GPU 4 into 2 × 2g.35gb + 1 × 3g.71gb and GPU 5 into 2 × 1g.18gb + 1 × 2g.35gb + 1 × 3g.71gb, and leaves GPUs 0-3, 6 and 7 unpartitioned.

custom-mig-config.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: custom-mig-config
data:
  config.yaml: |
    version: v1
    mig-configs:
      all-disabled:
        - devices: all
          mig-enabled: false
      h200-149:
        - devices: [4]
          mig-enabled: true
          mig-devices:
            "2g.35gb": 2
            "3g.71gb": 1
        - devices: [5]
          mig-enabled: true
          mig-devices:
            "1g.18gb": 2
            "2g.35gb": 1
            "3g.71gb": 1
        - devices: [0,1,2,3,6,7]
          mig-enabled: false

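Apply the configuration

Create the ConfigMap, point the ClusterPolicy's mig-manager at it, then select the h200-149 profile for the node through the nvidia.com/mig.config label:

kubectl apply -n gpu-operator -f custom-mig-config.yaml

kubectl patch clusterpolicies.nvidia.com/cluster-policy \
  --type='json' \
  -p='[{"op":"replace", "path":"/spec/migManager/config/name", "value":"custom-mig-config"}]'

kubectl label nodes h200-149 nvidia.com/mig.config=h200-149 --overwrite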
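After the label is set, mig-manager repartitions the GPUs and reports progress in the `nvidia.com/mig.config.state` node label (it reads `success` in the label dump further down). A polling sketch, assuming the label ends in either `success` or `failed`; `get_mig_state` and `wait_mig_state` are hypothetical helper names, and the 600 s timeout and 10 s interval are arbitrary defaults.

```shell
#!/bin/sh
# Read mig-manager's state label from a node (dots in the key escaped for JSONPath).
get_mig_state() {
  kubectl get node "$1" \
    -o jsonpath='{.metadata.labels.nvidia\.com/mig\.config\.state}'
}

# Usage: wait_mig_state NODE [TIMEOUT_SECONDS] [INTERVAL_SECONDS]
# Prints the final state; returns 0 on success, 1 on failed, 2 on timeout.
wait_mig_state() {
  local node="$1" timeout="${2:-600}" interval="${3:-10}" waited=0 state
  while [ "$waited" -lt "$timeout" ]; do
    state="$(get_mig_state "$node")"
    case "$state" in
      success) echo "success"; return 0 ;;
      failed) echo "failed"; return 1 ;;
    esac
    # Any other value (for example still pending): wait and poll again.
    sleep "$interval"
    waited=$((waited + interval))
  done
  echo "timeout"
  return 2
}
```

For example: `wait_mig_state h200-149 && kubectl describe node h200-149 | grep nvidia.com/mig`.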

Check the configuration

kubectl get node h200-149 -o=jsonpath='{.metadata.labels}' | jq .

kubectl describe node h200-149 | grep nvidia.com/mig
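The full label set is long. To reduce the output of the first command above to one line per MIG profile, a small filter can keep only the `nvidia.com/mig-<profile>.count` labels (listed below). A sketch; `mig_counts` is a hypothetical helper name, and the regular expression assumes profile names of the form `<N>g.<M>gb`, like the ones in this section. It works on both the compact JSON that `kubectl` prints and the pretty-printed output of `jq .`.

```shell
#!/bin/sh
# Read node labels as JSON on stdin; print "<profile> <count>" per MIG profile.
mig_counts() {
  grep -oE '"nvidia\.com/mig-[0-9]+g\.[0-9]+gb\.count": *"[0-9]+"' |
    sed -E 's#"nvidia\.com/mig-([^"]+)\.count": *"([0-9]+)"#\1 \2#'
}
```

Usage: `kubectl get node h200-149 -o=jsonpath='{.metadata.labels}' | mig_counts`. With labels like those listed below, it would print `1g.18gb 2`, `2g.35gb 3` and `3g.71gb 2`, one per line.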

The node is then labeled automatically with entries in the following format:

"nvidia.com/mig-1g.18gb.count": "2",
"nvidia.com/mig-1g.18gb.engines.copy": "1",
"nvidia.com/mig-1g.18gb.engines.decoder": "1",
"nvidia.com/mig-1g.18gb.engines.encoder": "0",
"nvidia.com/mig-1g.18gb.engines.jpeg": "1",
"nvidia.com/mig-1g.18gb.engines.ofa": "0",
"nvidia.com/mig-1g.18gb.memory": "16384",
"nvidia.com/mig-1g.18gb.multiprocessors": "16",
"nvidia.com/mig-1g.18gb.product": "NVIDIA-H200-MIG-1g.18gb",
"nvidia.com/mig-1g.18gb.replicas": "1",
"nvidia.com/mig-1g.18gb.sharing-strategy": "none",
"nvidia.com/mig-1g.18gb.slices.ci": "1",
"nvidia.com/mig-1g.18gb.slices.gi": "1",
"nvidia.com/mig-2g.35gb.count": "3",
"nvidia.com/mig-2g.35gb.engines.copy": "2",
"nvidia.com/mig-2g.35gb.engines.decoder": "2",
"nvidia.com/mig-2g.35gb.engines.encoder": "0",
"nvidia.com/mig-2g.35gb.engines.jpeg": "2",
"nvidia.com/mig-2g.35gb.engines.ofa": "0",
"nvidia.com/mig-2g.35gb.memory": "33280",
"nvidia.com/mig-2g.35gb.multiprocessors": "32",
"nvidia.com/mig-2g.35gb.product": "NVIDIA-H200-MIG-2g.35gb",
"nvidia.com/mig-2g.35gb.replicas": "1",
"nvidia.com/mig-2g.35gb.sharing-strategy": "none",
"nvidia.com/mig-2g.35gb.slices.ci": "2",
"nvidia.com/mig-2g.35gb.slices.gi": "2",
"nvidia.com/mig-3g.71gb.count": "2",
"nvidia.com/mig-3g.71gb.engines.copy": "3",
"nvidia.com/mig-3g.71gb.engines.decoder": "3",
"nvidia.com/mig-3g.71gb.engines.encoder": "0",
"nvidia.com/mig-3g.71gb.engines.jpeg": "3",
"nvidia.com/mig-3g.71gb.engines.ofa": "0",
"nvidia.com/mig-3g.71gb.memory": "71424",
"nvidia.com/mig-3g.71gb.multiprocessors": "60",
"nvidia.com/mig-3g.71gb.product": "NVIDIA-H200-MIG-3g.71gb",
"nvidia.com/mig-3g.71gb.replicas": "1",
"nvidia.com/mig-3g.71gb.sharing-strategy": "none",
"nvidia.com/mig-3g.71gb.slices.ci": "3",
"nvidia.com/mig-3g.71gb.slices.gi": "3",
"nvidia.com/mig.capable": "true",
"nvidia.com/mig.config": "h200-149",
"nvidia.com/mig.config.state": "success",
"nvidia.com/mig.strategy": "mixed",
"nvidia.com/mps.capable": "false",
"nvidia.com/vgpu.present": "false"

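When deploying an application, request a MIG device in the container's resources section of the Deployment. For extended resources such as MIG devices, requests and limits must be equal if both are set:

resources:
  requests:
    nvidia.com/mig-3g.71gb: 1
  limits:
    nvidia.com/mig-3g.71gb: 1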
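The snippet above is only the `resources` part of a container spec. For a quick end-to-end check, a hypothetical helper can print a complete Pod (rather than a Deployment) that requests one MIG slice, ready to pipe into `kubectl apply`. The container name, `restartPolicy: Never` and the `nvidia-smi -L` command are illustrative assumptions; the image is left to the caller and must be able to run `nvidia-smi`. Only `limits` is set, since Kubernetes uses the limit as the request when an extended-resource request is omitted.

```shell
#!/bin/sh
# Usage: mig_pod_manifest NAME PROFILE IMAGE
# Prints a minimal Pod manifest requesting one nvidia.com/mig-<PROFILE> device.
mig_pod_manifest() {
  local name="$1" profile="$2" image="$3"
  cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: ${name}
spec:
  restartPolicy: Never
  containers:
    - name: cuda
      image: ${image}
      command: ["nvidia-smi", "-L"]
      resources:
        limits:
          nvidia.com/mig-${profile}: 1
EOF
}
```

For example: `mig_pod_manifest mig-test 3g.71gb <your-cuda-image> | kubectl apply -f -`, after which `kubectl logs mig-test` should list a single MIG device.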

显卡切分策略

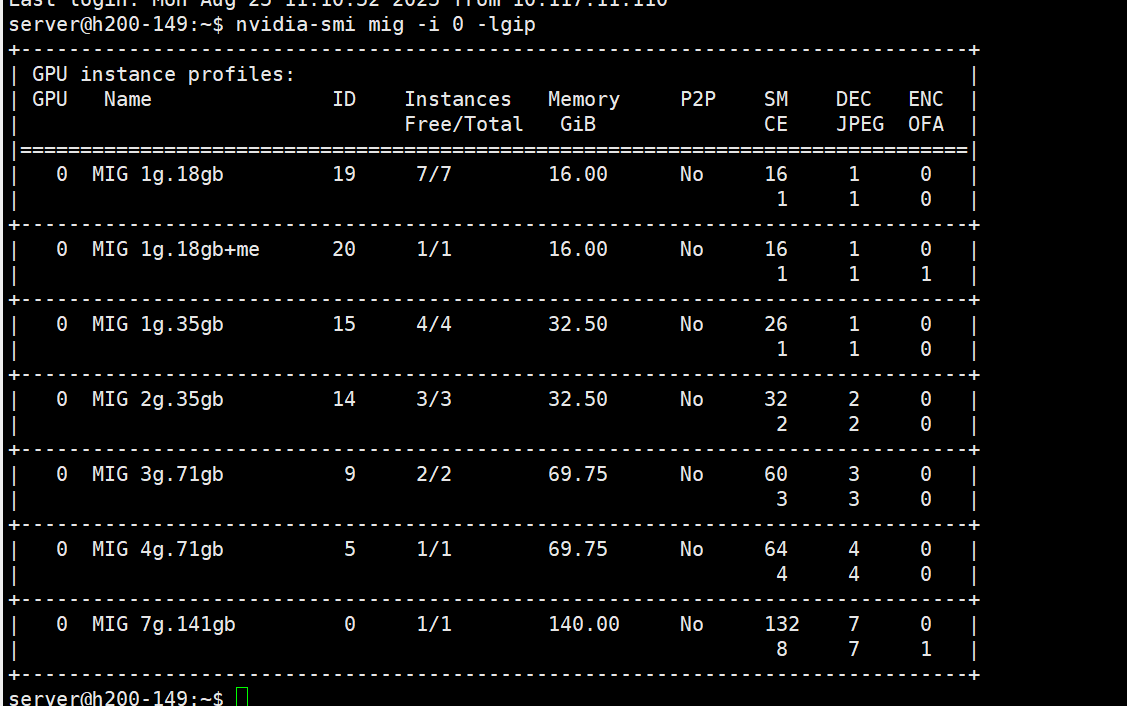

# 查看 0 号显卡支持的切分

nvidia-smi mig -i 0 -lgip

# 查看所有显卡

nvidia-smi mig -lgip

Example split for a single GPU (GPU 5 in the configuration above):

- "1g.18gb": 2
- "2g.35gb": 1
- "3g.71gb": 1

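Partitioning rules:

- Quick check: the number before "g" (1g, 2g, 3g) is the number of compute slices an instance uses; the total across all instances on one GPU must not exceed 7. The example above uses 1 + 1 + 2 + 3 = 7.
- Precise check: make sure that, summed over all instances, each resource (Memory/SM/CE/DEC/JPEG/ENC/OFA) stays within the totals of the full-GPU profile, 7g.141gb.
- Instances must also fit the GPU's placement rules; `nvidia-smi mig -lgipp` lists the valid placements for each profile.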
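The two budget checks can be scripted for a planned layout. A minimal sketch with a hypothetical helper `mig_fits`: compute slices are read from the profile name, memory slices come from a lookup table. The memory-slice counts and the 7-compute / 8-memory budget are assumptions modeled on the H200 141 GB profile list; confirm them with `nvidia-smi mig -lgip` on your hardware. Placement rules are not checked, so a layout that passes can still be rejected.

```shell
#!/bin/sh
# Memory slices per H200 MIG profile (assumed values; verify with nvidia-smi mig -lgip).
mem_slices() {
  case "$1" in
    1g.18gb) echo 1 ;;
    1g.35gb|2g.35gb) echo 2 ;;
    3g.71gb|4g.71gb) echo 4 ;;
    7g.141gb) echo 8 ;;
    *) return 1 ;;
  esac
}

# Usage: mig_fits PROFILE:COUNT [PROFILE:COUNT ...]   (one GPU)
# Prints "ok" and returns 0 if both budgets hold; otherwise prints the totals.
mig_fits() {
  local compute=0 memory=0 item profile count mem
  for item in "$@"; do
    profile="${item%%:*}"
    count="${item##*:}"
    mem="$(mem_slices "$profile")" || { echo "unknown profile: $profile"; return 2; }
    # Compute slices are the number before "g", e.g. 3 for 3g.71gb.
    compute=$((compute + ${profile%%g*} * count))
    memory=$((memory + mem * count))
  done
  if [ "$compute" -le 7 ] && [ "$memory" -le 8 ]; then
    echo "ok"
  else
    echo "over budget: compute $compute/7, memory $memory/8"
    return 1
  fi
}
```

For the example split, `mig_fits 1g.18gb:2 2g.35gb:1 3g.71gb:1` uses 7 compute and 8 memory slices and passes, while `mig_fits 3g.71gb:2 1g.18gb:1` passes the quick compute check (7) but needs 9 memory slices.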

Common issues

Error:

Failed to pull image "registry.k8s.io/nfd/node-feature-discovery:v0.17.3": failed to pull and unpack image "registry.k8s.io/nfd/node-feature-discovery:v0.17.3": failed to resolve reference "registry.k8s.io/nfd/node-feature-discovery:v0.17.3": failed to do request: Head "https://us-west2-docker.pkg.dev/v2/k8s-artifacts-prod/images/nfd/node-feature-discovery/manifests/v0.17.3": Service Unavailable

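Images on overseas registries (registry.k8s.io here) cannot be pulled directly; pull them through the m.daocloud.io mirror instead.

Fix (run on each node where the pull fails): pull the mirrored image, then retag it with the original name so the kubelet can use the local copy.

ctr -n k8s.io i pull m.daocloud.io/registry.k8s.io/nfd/node-feature-discovery:v0.17.3 && \
ctr -n k8s.io i tag m.daocloud.io/registry.k8s.io/nfd/node-feature-discovery:v0.17.3 registry.k8s.io/nfd/node-feature-discovery:v0.17.3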
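When several images fail the same way, the pull-and-retag step can be wrapped in a loop. A sketch, assuming every reference is fully qualified (it starts with a registry host such as registry.k8s.io) and that m.daocloud.io serves it under the `m.daocloud.io/<original reference>` path used above; `daocloud_ref` and `pull_via_mirror` are hypothetical helper names.

```shell
#!/bin/sh
# Map a fully-qualified image reference to its m.daocloud.io mirror path.
daocloud_ref() {
  local ref="$1" host="${1%%/*}"
  # Require "<host>/<path>" where the host contains a dot, e.g. registry.k8s.io.
  case "$ref" in
    */*) ;;
    *) echo "not fully qualified: $ref" >&2; return 1 ;;
  esac
  case "$host" in
    *.*) ;;
    *) echo "not fully qualified: $ref" >&2; return 1 ;;
  esac
  printf 'm.daocloud.io/%s\n' "$ref"
}

# Pull each image through the mirror into containerd's k8s.io namespace,
# then tag it with its original name; stops at the first failure.
pull_via_mirror() {
  local ref mirror
  for ref in "$@"; do
    mirror="$(daocloud_ref "$ref")" || return 1
    ctr -n k8s.io images pull "$mirror" || return 1
    ctr -n k8s.io images tag "$mirror" "$ref" || return 1
  done
}
```

For example: `pull_via_mirror registry.k8s.io/nfd/node-feature-discovery:v0.17.3`.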